Monday, December 8, 2008

Cloud Computing Predictions for 2009

1- Clouds reduce the effect of the recession.

The basic argument is that since cloud computing is a more cost-effective means of obtaining IT services, it enables the IT budget to go further. But that would simply take money away from the IHVs and big consultancies, so a more careful study would be needed to determine whether this is a zero-sum game. My thought here is that the recession may accelerate the adoption of cloud computing so that consumers of IT spend less, but it will hurt the IHVs.

2- Broader depth of clouds

This prediction is the simple progression of a new technology that is getting adopted. More customers are coming in and all have slightly different requirements that the cloud providers will cater to. It is easier to do that with specialized solutions and thus we'll see a broadening of the features offered in clouds.

3- VC, money & long term viability

This is an interesting prediction from Michael: cloud aggregators will be funded and the other players in the stack will get squeezed. Cloud aggregators are companies like RightScale and Cassatt, and there is no doubt in my mind that they will do well since leveraging cloud computes is still hard work. I personally think that the VCs are not going to play in this space because of the presence of large incumbents like IBM, Amazon, Google, HP, and Sun. I think the real innovation investments will come from the emerging markets since they have the most to gain from lower IT costs.

4- Cloud providers become M&A targets

This item reads as a prediction that the consolidation in the cloud space will accelerate in 2009. My prediction is contrarian in the sense that I think we'll see more specialized clouds show up to cater to very specific niches, and thus we'll see market segmentation before we see consolidation. For example, most clouds are web application centric, and putting up a web server is one feature that is widely supported. However, the financial industry has a broader need than just web servers, as do product organizations like Boeing and GE. I think there is a great opportunity to build specialized clouds for those customers, as it can be piggybacked on supply chain integration so players like Tibco can come in. That is a very large market with very high value: much more interesting than a little $49/month hosted web server.

5- Hybrid solutions

On-premise and cloud solutions working together. That prediction is more of a look back, but it is a sign that cloud computing is accepted and companies are actively planning how to leverage this new IT capability in their day-to-day operation.

6- Web 3.0

More tightly integrated Web 2.0? It clearly is all about the business or entertainment value. I really like what I am seeing in the data mining space where knowledge integration is creating opportunities for small players with deep domain experts to make a lot of money. Simply take a look at marketing intelligence: the most innovative solutions come from tiny players. I think this innovation will drive cloud computing for the next couple of years since it completely levels the playing field between SMBs and large enterprises. This makes domain expertise more valuable, and the SMBs are much more nimble and can now monetize that skill. Very exciting!

7- Standards and interoperability

Customers will demand it, incumbent cloud providers will fight it. I can't see Google and IBM giving up their closed systems, so the world will add another ETL layer to IT operations and spring to life some more consultants.

8- Staggered growth

A simple prediction that everything cloud will expand.

9- Technology advances at the cloud molecular level

This is an item dear to my heart: cloud optimized silicon. It is clear that a processor that works well in your iPhone will not be the right silicon for the cloud. There are many problems to be solved in cloud computing that only have a silicon answer, so we are seeing fantastic opportunities here. This innovation will be attenuated by the lack of liquidity in the western world but this provides amazing opportunities for the BRIC countries to develop centers of excellence that surpass the US. And 2009 will be the key year for this possible jump since the US market will be distracted trying to stay in cash till clarity improves. As they say, fortunes are made in recessions.

10- Larger Adoption

A good prediction to end with for a cloud computing audience: business will be good in 2009.

Sunday, December 7, 2008

Comparing the Cost Continued...

The regression workload can be generated by a software design team developing a new application, a financial engineering team back testing new trading strategies, or a mechanical design team designing a new combustion engine that runs on alternative fuels.

The technical workload can be a new rendering algorithm to model fur on an animated character, or a new economic model that drives critical risk parameters in a trading strategy, or an acoustic characterization of an automobile cabin.

The first workload is characterized by a collection of tests that are run to guarantee correctness of the product during development. Our test case for a typical regression run is 1000 tests that run at an average of 15 minutes each, or 250 cpu hours per run. Each developer typically runs two such regressions per day, and for a 50 person design team this yields 100 regression runs per day. The total workload equates to roughly 1040 cpu hours per hour (25,000 cpu hours spread over 24 hours) and would keep a 1000 processor cluster 100% occupied.

The second workload shifts the focus from capacity to capability. The computational task is a single simulation that requires 5 cpu hours to complete. The benchmark workload is the work created by a ten person research team in which each researcher runs five simulations per day. Many of these algorithms can actually run in parallel, and such a task could run in 30 minutes when executed in parallel on ten processors. Latency to solution is a major driver of R&D team productivity, and this workload would have priority over the regression workload, particularly during the work day. The total workload equates to roughly 31 cpu hours per hour (250 cpu hours per day) because this workload runs just in the eight hour work day.
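The arithmetic behind both workload figures can be checked with a short sketch. All inputs come from the two paragraphs above, with one assumption: that each of the ten researchers runs five simulations per day, which is the reading that reproduces the quoted figure of roughly 31 cpu hours per hour. The variable names are illustrative only.

```python
# Regression workload (capacity): inputs taken from the text.
TESTS_PER_RUN = 1000
MINUTES_PER_TEST = 15
RUNS_PER_DEVELOPER_PER_DAY = 2
DEVELOPERS = 50

cpu_hours_per_run = TESTS_PER_RUN * MINUTES_PER_TEST / 60
runs_per_day = RUNS_PER_DEVELOPER_PER_DAY * DEVELOPERS
regression_cpu_hours_per_day = cpu_hours_per_run * runs_per_day
# Regressions can run around the clock, so spread them over 24 hours.
regression_cpu_hours_per_hour = regression_cpu_hours_per_day / 24

# Technical workload (capability): inputs taken from the text.
CPU_HOURS_PER_SIMULATION = 5
RESEARCHERS = 10
SIMULATIONS_PER_RESEARCHER_PER_DAY = 5  # assumption: five per researcher
WORK_DAY_HOURS = 8
PARALLEL_PROCESSORS = 10

technical_cpu_hours_per_day = (
    CPU_HOURS_PER_SIMULATION * RESEARCHERS * SIMULATIONS_PER_RESEARCHER_PER_DAY
)
# This workload only runs during the eight hour work day.
technical_cpu_hours_per_hour = technical_cpu_hours_per_day / WORK_DAY_HOURS
# Ideal wall-clock time when one simulation is split over ten processors.
parallel_minutes = CPU_HOURS_PER_SIMULATION * 60 / PARALLEL_PROCESSORS

if __name__ == "__main__":
    print(f"regression: {regression_cpu_hours_per_day:.0f} cpu-h/day, "
          f"{regression_cpu_hours_per_hour:.0f} cpu-h/h")
    print(f"technical:  {technical_cpu_hours_per_day:.0f} cpu-h/day, "
          f"{technical_cpu_hours_per_hour:.2f} cpu-h/h, "
          f"{parallel_minutes:.0f} min per simulation in parallel")
```

The regression rate comes out slightly above 1000 cpu hours per hour, which is why a 1000 processor cluster would be fully occupied.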

Running these two workloads on our cloud computing providers we get the following costs per day:

| Benchmark | Amazon | Rackspace/Mosso |
|---|---|---|
| Regression Workload | $25,075.17 | $18,250.25 |
| Knowledge Discovery | $265.09 | $230.13 |

The total cost of $20-25k per day makes the regression workload too expensive for outsourcing to today's cloud providers. A 1000 processor on-premise x86 cluster costs roughly $10k/day including overhead and amortization. The cost of bulk computes like the regression workload needs to go down by at least a factor of 5x before cloud computing can bring in small and medium-sized enterprises. However, the technical workload at $250/day is very attractive to move to the cloud, since this workload is periodic with respect to the development cycle and it moves CapEx to OpEx, which frees up capital for other purposes.

The big cost difference between Rackspace/Mosso and Amazon is the Disk I/O charge. It doesn't appear that Rackspace monetizes this cost. From the cost models, this appears to be a liability for them since the Disk I/O cost (moving the VM image and data sets to and from disk) represents roughly 20% of the total costs. Fast storage is notoriously expensive so this appears to be a weakness of Rackspace.
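To see where the roughly 20% figure comes from, the daily totals can be split into a CPU component and a remainder. This is only a sketch: the posts do not publish the I/O and storage volumes, so the remainder below lumps disk I/O, internet I/O, and storage together. It assumes the totals were computed from 25,000 cpu hours per day for the regression workload and 250 cpu hours per day for the technical workload (listed as Knowledge Discovery in the table), priced at the per-cpu-hour rates from the provider comparison.

```python
# Per-cpu-hour prices from the provider comparison table.
CPU_PRICE = {"Amazon": 0.80, "Rackspace/Mosso": 0.72}

# Daily totals from the cost table above.
DAILY_TOTAL = {
    "Regression Workload": {"Amazon": 25075.17, "Rackspace/Mosso": 18250.25},
    "Knowledge Discovery": {"Amazon": 265.09, "Rackspace/Mosso": 230.13},
}

# Assumption: cpu hours per day derived from the workload descriptions.
CPU_HOURS_PER_DAY = {"Regression Workload": 25000, "Knowledge Discovery": 250}


def breakdown(workload, provider):
    """Return (cpu_cost, remainder, remainder_share) for one table cell.

    The remainder is everything that is not CPU time: disk I/O,
    internet I/O, and storage, which cannot be separated here.
    """
    total = DAILY_TOTAL[workload][provider]
    cpu_cost = CPU_HOURS_PER_DAY[workload] * CPU_PRICE[provider]
    remainder = total - cpu_cost
    return cpu_cost, remainder, remainder / total


if __name__ == "__main__":
    for workload in DAILY_TOTAL:
        for provider in CPU_PRICE:
            cpu_cost, remainder, share = breakdown(workload, provider)
            print(f"{workload:20s} {provider:16s} "
                  f"cpu ${cpu_cost:>9,.2f}  other ${remainder:>8,.2f}  "
                  f"({share:.1%} of total)")
```

Under these assumptions the non-CPU share of the Amazon regression bill is about a fifth of the total, while on Rackspace/Mosso it is a small fraction, consistent with the observation that Rackspace does not charge for disk I/O.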

In a future article we will dissect these costs further.

Comparing Costs of Different Cloud Computing Providers

Many activities at the US National Labs are directed at evaluating whether it is cost effective to move to AWS or similar services. To be able to compare our results to that research, we decided to map all costs into AWS-compatible pricing units. This yielded the following very short list:

| Provider | CPU $/cpu-hr | Disk I/O $/GB | Internet I/O $/GB | Storage $/GB-month |
|---|---|---|---|---|
| Amazon | $0.80 | $0.10 | $0.17 | $0.15 |
| Rackspace/Mosso | $0.72 | $0.00 | $0.25 | $0.50 |

The reason for the short list is that there are very few providers that actually sell computes. Most of the vendors that use the label cloud provider are actually just hosting companies of standard web services. Companies like 3Tera, Bungee Labs, Appistry, and Google cast their services in terms of web application services, not generic compute services. This makes these services not applicable to the value-add computes that are common during the research and development phase of product companies.

In the next article we are going to quantify the cost of different IT workloads.

Thursday, October 23, 2008

Dynamic IT

CIO used the broad definition of cloud computing: "a style of computing where massively scalable IT-related capabilities are provided 'as a service' using Internet technologies to multiple external customers". Other terms used are "on-demand services", "cloud services", "Software-as-a-Service".

The survey confirmed that cloud computing is a solution to the need for flexibility in IT resource management. IT needs flexibility and cost savings, but is unwilling to jump in with both feet until some lingering concerns are addressed: the top concern being security.

Cloud computing will be used in many pilot/proof-of-concept projects by the incumbents, and it will be experimented with as full-blown business models by a growing cadre of start-ups. We have argued many times in this blog that the cloud computing model will be driven by the small and medium business segment because they value cost savings over security or SLAs. And as is typical with technologies that offer dramatic cost savings, when successful, there will be carnage among the companies that are holding on too tightly to old-fashioned business models.

Monday, October 6, 2008

Bluehouse is in public beta

Willy Chiu, VP at IBM of High Performance On-Demand Solutions stated: "We are moving our clients, the industry and even IBM itself to have a mixture of data and applications that live in the data centre and in the cloud." IBM's approach is to expand its cloud computing offerings through a 'four-pronged strategy':

In addition to Bluehouse, IBM is also rolling out a handful of web services. Policy Tester On-Demand will automate the scanning of web content to ensure that it complies with industry legislation, and AppScan On-Demand will scan web applications for security bugs. Sean Poulley, VP of Online Collaboration Services compared the Bluehouse tools to those of Microsoft and Google: "Whereas Microsoft is document centric and Google is email centric, our solution is a mixture of both".

IBM's $400M investment in a new data center to support this new mid-market SaaS/Cloud Computing services portfolio brings another large player into the mix. These tools have been a long time coming, but with every major brand now on-line, the branding wars can begin.

Thursday, October 2, 2008

Windows Server on Amazon EC2

According to Vogels' blog, the area that accelerated the adoption of this functionality in Amazon's Elastic Compute Cloud was the entertainment industry, due to the wide range of excellent codecs available for Windows. Here is the power of Microsoft's dominance of the client side translating into a huge benefit for cloud adoption. With 20-20 hindsight, the benefit of quality codecs is now obvious, and it will drive very quick adoption of Windows in the Cloud. Content apparently is still king and thus the conduit that delivers it is a critical component. Turns out that Microsoft does have an unfair advantage in the Cloud space.

Wednesday, October 1, 2008

Red Dog and Windows Cloud: Microsoft is coming!

Enter HPC, cluster, and cloud computing: so far this has been driven by Linux mainly because there have been no commercial offerings that solve the problem of pedal-to-the-metal applications that need tight integration with the underlying hardware and operating system services such as memory, communication stacks and I/O.

For a decade now, Google has blazed the way with web-scale hardware and software infrastructures that are showing their true value. And now Amazon Web Services is also offering an IT-for-rent model that is perfect for web based services. Detrimental to Microsoft, Google and Amazon Web Services do not enable any Microsoft application software, operating systems, development tools, or even web services. Clearly, this is moving momentum away from the Microsoft universe and they have to counter to stay relevant.

Red Dog appears to be the first salvo across developers' bows that Microsoft is coming. Red Dog is Microsoft's IT-for-rent story, as an answer to Linux centric Amazon Web Services. The second shot is dubbed "Windows Cloud". It is a development environment for Internet-based applications, as an answer to Python centric Google Gears.

Given Microsoft's track record of building very productive development environments that have the hearts of most internal IT shops, I am confident that this will accelerate Cloud Computing adoption in the mid-market.

Monday, September 8, 2008

Google and corporate espionage

But first, the EULA flap. The old EULA stated: "You retain copyright and any other rights you already hold in Content which you submit, post or display on or through, the Services. By submitting, posting or displaying the content you give Google a perpetual, irrevocable, worldwide, royalty-free, and non-exclusive license to reproduce, adapt, modify, translate, publish, publicly perform, publicly display, and distribute any Content which you submit, post, or display on or through, the Services."

Clearly, Google didn't mess this up: they are a big company with a good legal team, so we have to assume that this EULA was deliberately written the way it was. Since Google is a conduit for content, while using this content for its own profit without wanting to pay for it, the EULA makes a lot of sense from Google's perspective. Equally clear is that asking for a "...perpetual, irrevocable, worldwide, royalty-free, and non-exclusive license to reproduce, adapt, modify... publish" is simply unbelievably aggressive, and it led to a revolt that forced Google to rewrite the EULA.

For Google's business model to remain viable, it has to extract customer behavior and owning the browser makes that a million times easier. Secondly, searching for information on the internet is essential to today's business, so Google has both customer behavior and customer knowledge searches. This means that it can deduce purpose and effectively learn what you learn. Finally, collective knowledge of your workforce adds a whole new layer of understanding for Google. For example, say you have decided to plan for a new product. The product research your organization is doing will be localized in time and in scope, thus making it easy to filter out of the backdrop of all other searches that your organization is doing. This means that your new product plans will be visible to Google and its clever group of data mining specialists. Their whole job is to mine for data like this so that the Google service can provide you with contextual information that you could use and thus will generate revenue for Google. Google simply needs to know more about your business than you do to continue to generate revenue.

Google of course is not unique in this respect. Any ad-revenue-driven service will need some spying and semantic inference to yield context to generate revenue. In the consumer space, it appears that people are more willing to part with their preferences in exchange for free access. But the cost for business seems a bit steep, particularly big business, and it comes as a surprise to me that only the media companies have been suing Google. Maybe the Google EULA flap will invigorate the debate on how much data a SaaS or Cloud provider can extract and own and how this needs to be regulated.

Sunday, August 17, 2008

IT as a Business: or IaaB

Jeff Barr, a Web Services Evangelist at Amazon, just published some interesting data that supports the observation that IT operation outsourcing is being leveraged aggressively. It also shows that this switch is happening incredibly quickly. For those in the business, this is not surprising because our customers have been screaming for more performance/capacity for a decade, mostly because the processor vendors such as Intel and IBM have not been able to provide anywhere near the performance improvements required to keep up with the data explosion.

From Amazon's 4th quarter earnings call, TechCrunch reports that the businesses that are taking advantage of IT operation outsourcing are not just tiny little start-ups:

"So who are using these services? A high-ranking Amazon executive told me there are 60,000 different customers across the various Amazon Web Services, and most of them are not the startups that are normally associated with on-demand computing. Rather the biggest customers in both number and amount of computing resources consumed are divisions of banks, pharmaceuticals companies and other large corporations who try AWS once for a temporary project, and then get hooked."

The value that is created by the on-demand capacity inherent to Cloud Computing is the big differentiator here for both startup and established business. For startups the value is inherent to the service, but for the mid-market it is on-demand capacity. To understand this, one must realize that most compute problems are bursty: it takes humans time to formulate experiments and set up the automation, but from that point on compute capacity and performance are the critical path.

In today's shift towards extracting more value out of operational business data, the mid-market is about to embark on a whole new degree of productivity; Cloud Computing and the pay-as-you-go business model remove IT operation, both CapEx and OpEx, as the limiter for all business: small, medium, and large. With this type of value creation, the switch-over can happen dramatically quickly.

Saturday, August 16, 2008

HPC is dead, long live HPC!

If you look at the fortunes of pure HPC providers like Silicon Graphics and Cray, it is obvious that corporate America has not been motivated by HPC vendors' marketing messages extolling the benefits of HPC for America's competitiveness. The only outfit that seems to keep these companies afloat is the NSA. This is a trend that has been documented for many years now at the Council on Competitiveness.

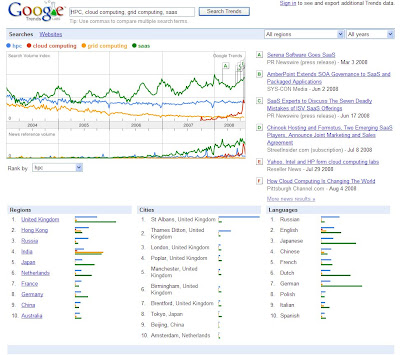

A quick Google Trends query shows that just when everybody is becoming an HPC consumer, the term is slowly losing its luster in favor of more business-friendly terms like Cloud Computing and SaaS. SaaS in particular will force the provider to leverage HPC technologies such as clusters and distributed computing.

You can keep track of these terms here.

The data also shows that HPC interest and innovation have shifted away from the US to Europe and the Far East. Organizations like India's Tata are building and operating world-class HPC installations. Even Sweden is on the top 5 list. It makes a lot of sense for Russia, China, and India to jump on this: they have very little inertia and they understand that moving up the value chain is the next step of their evolution to play in the global economy.

Thursday, August 14, 2008

SaaS Business Process Outsourcing Buying Attitude

The first step in the survey was to gauge how familiar people are with SaaS. 31% indicated that they considered themselves knowledgeable, whereas 50% felt less comfortable, and 19% had never heard of the term.

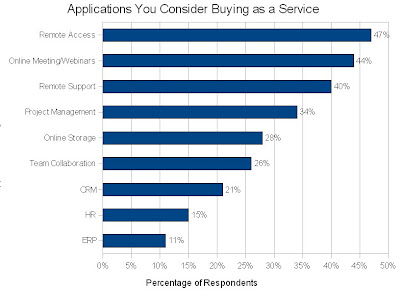

When asked which top five categories of applications they would consider purchasing as a service, Remote Access was number one (given that Citrix commissioned this study that is hardly surprising). The full rankings are shown in the following graph:

That only 15% of the respondents thought the SaaS model to be more attractive than on-premise software deployment is very telling. It shows that most people can't fathom switching processes/vendors/applications, and this is understandable. Very few software vendors make it easy to get data out of their systems, so creating the automation and validation procedures to transition is too much for many SMEs. Secondly, from a risk point of view, providing continuity during the switch-over is a daunting task, and something that most executives would rather avoid till the old system is so broken that it hurts. This is typically when the organization runs into financial trouble, which is kinda the wrong time to reinvest in something better. Still, all understandable.

Most SaaS providers understand this dilemma and provide lots of tools to serialize data from and to different systems. There are also plenty of third party solutions that make this process much more palatable. Cast Iron Systems is one such provider, offering on-premise and on-demand solutions for these ETL tasks.

Wednesday, August 13, 2008

SaaS aggregation and experimentation

"We are now at point where implementors of SaaS capabilities are being disrupted by newer SaaS capabilities. Services that are built largely from other services are a reality, and offer many clear advantages. The types of services that could be used in the creation of new services span the spectrum, from base infrastructure services to complementary high-level application services that can be composed or mashed up. Example services include: compute and storage services; DB and message-based queuing services; identity management services; log analysis and analytic services; monitoring and health management services, payment processing services; e-commerce services like storefronts or catalogs; mapping services; advertisement services; in addition to the more well-known business application services like CRM and accounting.

The move to SaaS applications built on SaaS is a much more profound shift than the move from on-premise applications to SaaS applications. The software industry is beginning to display characteristics that mimic the supply chains and service layering that are commonplace in other industries like transportation, financial services, insurance, food processing, etc. A simple set of categories like applications, middleware and infrastructure no longer represents the reality of software products or vendors. Instead of a small number of very large, vertically integrated vendors, we are seeing an explosion of smaller, more focused software services and vendors. The reasons for this transition are simple: It takes less capital and other resources to create, integrate, assemble and distribute useful software capabilities."

In essence, with BIG companies like IBM, Google, and Amazon committing billions of dollars in infrastructure, SaaS start-ups and their financial backers can experiment very quickly with new business offerings and see what sticks. This is a dream for a venture capitalist: they don't need to be experts in understanding the business, they simply need to apply a portfolio approach to have their bases covered. This is typical MBA fare and thus very easily understood by today's VCs.

The reason these big companies are investing so heavily is because they believe that the future is SaaS and Cloud Computing. When infrastructure investments are taking place by multiple, large and international players, you know that the business dynamics are about to change.

Sunday, August 3, 2008

The Achilles' Heel of SaaS: the processor vendors Intel and IBM

All business SaaS workflows manage important information assets that need to be leveraged to their fullest extent for an organization to be competitive. The computes to leverage this information have exploded due to the maturity of database management systems, but also due to the sheer volume of competitive information available on the internet. Most of the current database research is about query languages and systems for querying non-stationary data and federated systems. Fully automated machine learning systems like Google's web index are only the tip of the iceberg. Compute requirements for optimization techniques in inventory management and logistics are much more severe.

This explosion of compute requirements creates a huge problem. Since SaaS relies on multi-tenancy to offer profit margin for its provider, the end user actually gets less hardware to work with. Secondly, the demand for computes handily outstrips the offerings by Intel and IBM. At the end of 2007, Google computes were up at the rate of 10,000 CPU years per month, and that rate has been tripling every year since 2004. All this implies that we are in for a bumpy ride, with SaaS providers having to scale out their data centers rapidly due to customer growth and compute demands, and Intel and IBM not doing their part to keep up with the compute needs. This will inevitably lead to an increased rate of innovation on the hardware side that the successful software organizations will leverage to differentiate. Virtualization of these hardware assets will be the name of the game to remain nimble and not get stuck when you bet on the wrong horse.
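A rough sketch of what "tripling every year" implies, using only the two figures quoted above (10,000 CPU years per month at the end of 2007, tripling annually since 2004). The hardware side is a labeled assumption, not a number from this post: `ASSUMED_HW_DOUBLING_YEARS` is a hypothetical parameter standing in for how fast per-processor performance improves, and it should be replaced with whatever figure you trust.

```python
# Figures quoted in the post.
REFERENCE_YEAR = 2007
REFERENCE_RATE = 10_000        # CPU years per month at the end of 2007
DEMAND_GROWTH_PER_YEAR = 3.0   # "tripling every year since 2004"

# Assumption (not from the post): per-processor performance doubles
# every two years. Purely illustrative; change it to test other cases.
ASSUMED_HW_DOUBLING_YEARS = 2.0


def implied_demand(year):
    """CPU years per month implied by constant annual tripling."""
    return REFERENCE_RATE * DEMAND_GROWTH_PER_YEAR ** (year - REFERENCE_YEAR)


def hardware_gain(years):
    """Performance multiple delivered by hardware over a span of years."""
    return 2.0 ** (years / ASSUMED_HW_DOUBLING_YEARS)


def processor_count_multiple(start_year, end_year):
    """How many times more processors are needed once the hardware
    gains have been absorbed by the growth in demand."""
    demand_multiple = implied_demand(end_year) / implied_demand(start_year)
    return demand_multiple / hardware_gain(end_year - start_year)


if __name__ == "__main__":
    for year in range(2004, 2008):
        print(f"{year}: {implied_demand(year):>9,.0f} CPU years/month")
    multiple = processor_count_multiple(2004, 2007)
    print(f"2004-2007 processor count multiple: {multiple:.1f}x")
```

Whatever doubling period is plugged in, a demand curve that triples annually leaves a gap that can only be closed by adding machines, which is the scale-out pressure described above.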

Friday, August 1, 2008

Google and Amazon as Benchmarkers

The next innovation in benchmarking is when Google and Amazon jump in. Google has already started with Google Trends, but the real business value is held by online retailers like Amazon. Amazon, as part of its own offering, already demonstrates lots of benchmarking prowess in generating customer-focused result pages that bring together your query and purchasing history and that of other shoppers that appear to be similar in the context of your current query/purchase. For retailers this is so powerful. Amazon as a cloud computing provider has its platform perfectly positioned to attract more specialized retailers that would not have the scale to do proper benchmarking. There is no doubt in my mind that Amazon will leverage this incredible information asset and make oodles of money.

Wednesday, July 30, 2008

The Goldman Sachs SaaS scorecard

In my mind, the issue of what cloud computing is or is not, is misguided to some degree and only useful for the software professionals that are leveraging the different offerings. And these folks should be sophisticated enough to recognize the different underlying technologies. From an end user perspective, what you can do with the end result of cloud computing is much more interesting. And in that regard, the offerings are much more easily understood. For example, using Google PicasaWeb or Smugmug to store your family's pictures on sharable, replicated, backed-up storage is an end user value you can measure.

In all this research I did come across a nice and simple scorecard that Goldman Sachs uses to educate its clients about one form of cloud computing: SaaS. Goldman Sachs uses the following characteristics to judge the value proposition of a SaaS vendor or on-premise ISV.

- Does the application provide value as a stand-alone workflow or does it require extensive integration with other applications?

- Integration is the biggest hurdle a SaaS provider encounters. When applications, and the workflows they automate, become more standardized with accepted APIs, this will become less of a hurdle but right now stand-alone value is the litmus test for success.

- Does the application represent an industry best-known-method or does it require extensive customization?

- Due to the fact that SaaS business models can only create value if they aggregate multiple users on the same software and/or hardware instance, customization dilutes the profitability.

- Is the application used by a distributed workforce and non-badge employees?

- This is clearly the driving force behind SaaS and other forms of cloud computing. In my mind, this is rooted in a pattern that has been driving IT innovation for the past decade. Consumers have become accustomed to mobility of information in their personal lives. Universal access to email or travel itineraries is so natural that it is aggravating when corporate data systems don't provide the same sophistication. It is hard to have confidence in your IT department if they push applications on you that look and work horribly compared to the applications you use in your personal life.

- Does the data managed by the application cross a firewall?

- This is the security aspect of SaaS applicability. If much of the data comes from outside the firewall as aggregated information to help the business unit then this is a simple decision. Many B2B workflows have this attribute. If the data being manipulated are the crown jewels of the company, attributes such as security, SLA, accountability, rules and regulations become big hurdles for adoption.

- Does the application benefit from customer aggregation?

- This is the benchmarking opportunity of SaaS. If you host thousands of similar businesses then you have the raw data to compare these companies among each other, and possibly against industry wide known metrics.

- Does the application deployment show dramatically lower upfront costs than an on-premise solution?

- Low upfront costs can defuse deployment resistance. This is a very important attribute for a SaaS offering that is targeting the Small and Medium sized Business (SMB) segments.

- Does the application require training to make it productive?

- If it does, it is bad news: internet applications need to do their task extremely well, particularly if your access to the application is through some seriously constrained interface like a smart phone or MID.

- Can the application be adopted in isolation?

- This is similar to the stand-alone requirement, but more focused on the procurement question for the SaaS solution. If the solution can be adopted by a department instead of having to be screened for applicability across the whole enterprise, it clearly will be easier to get to revenue.

- Is the application compute intensive/interactive?

- SaaS applications don't do well with large compute requirements mainly due to the multi-tenancy that is the basis of the value generation. If one customer interacts with the application and makes a request that pegs the server on which the application runs, all other customers will suffer.

Sunday, July 20, 2008

SaaS business development

Traditionally, the software acquisition process involves RFPs from vendors that are then run through some evaluation process leading to an acquisition. Money changes hands, and at that point, the business adoption starts. Software selection in this procurement-driven market is a matter of faith.

The open source community had to resort to a different approach. Simon Phipps dubbed this the adoption-led market. The basic dynamic here is that developers try out different packages, often open source, to construct prototypes with the goal of creating a deployable solution to their business problem.

It is clear that availability of open source solutions had to cross a certain critical mass of functionality and reliability before this market could develop. The LAMP stack was the first reliable infrastructure that was able to deploy business solutions, but now we have a great proliferation of functional augmentation to this stack that accelerates this adoption-led market.

Simon concludes in his blog entry:

Written down like that, it seems pretty obvious, but having a name for it – an adoption-led market – has really helped pull together explanations and guide strategy. For example:

- In a procurement-driven market you need to go out and sell and have staff to handle the sales process, but in an adoption-led market you need to participate in communities so you can help users become customers.

- In a procurement-led market you need shiny features and great demos, whereas in an adoption-led market you need software that is alive, evolving and responsive to feedback.

- In an adoption-led market you need support for older hardware and platforms because adopters will use what works on what they already have.

- Adoption-led users self-support in the community until they deploy (and maybe afterwards if the project is still “beta”) so withholding all support as a paid service can be counter-productive.

To me, the change from a faith-based procurement process to a more agile, functionality-driven approach is the basis of SaaS attractiveness. A small business can do an evaluation in 15 minutes and get a sense of whether the software is going to solve a problem. The on-demand test drive facility is, to me, the great breakthrough, and I find myself looking for that facility in all software evaluations now.

Observing my own behavior, building a SaaS business centers on this adoption-led approach. A potential client is looking for an easy-to-use trial capability, either as an open source package like MySQL or as a trial test drive like Bungee. Ease of use is the differentiator here since most evaluations will be opportunistic: if you can't impress your customer in the time it takes to drink a cup of coffee, you may have lost that customer forever.

Friday, July 18, 2008

SaaS economics

Complex server applications typically have so many configuration hooks that application deployment is not easily automated. This implies that bringing up and shutting down applications is not the same as what we are used to on the desktop. Applications become tightly coupled to physical host configuration, internal IT processes, and the prowess of the admin. Billy blames this on OSFAGPOS, or One Size Fits All General Purpose Operating Systems. OSFAGPOS is deployed in unison with the physical host because the OS integrates drivers that enable access to the physical host's hardware resources. A RAID or network stack can be configured to improve performance for specific application attributes, and it is this separation of roles that creates the root of all evil. rPath's vision is JeOS (read "juice"), or Just Enough OS, which packs all the metadata needed for the release engineering process to do the configuration automatically. This would make application startup fast, cheap, and reliable.

Here is an economic reason why new SaaS providers will siphon off a portion of the software universe. A typical ISV spends between 25 and 40% of its engineering and customer support expense on peripheral functionality such as installers, cross-platform portability, and system configuration. Since a SaaS provider solves these issues through a different business model it frees up a significant portion of the development budget to work on core application features.
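A quick back-of-the-envelope calculation shows how large that freed-up portion is relative to core work. This is a sketch under a deliberately optimistic assumption: that the SaaS model removes the peripheral spend entirely, so the result is an upper bound. The function name `core_budget_gain` is made up for illustration.

```python
def core_budget_gain(peripheral_share: float) -> float:
    """Relative increase in core-feature spend if peripheral work vanishes.

    peripheral_share is the fraction of the budget spent on installers,
    portability, and system configuration (0.25 to 0.40 in the text above).
    """
    if not 0.0 <= peripheral_share < 1.0:
        raise ValueError("peripheral_share must be in [0, 1)")
    core_share = 1.0 - peripheral_share
    return peripheral_share / core_share


if __name__ == "__main__":
    for share in (0.25, 0.40):
        print(f"peripheral {share:.0%} -> core budget grows by {core_budget_gain(share):.0%}")
```

With the quoted range, the budget available for core features grows by roughly a third at the low end and by roughly two thirds at the high end, provided the total budget stays the same.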

In my mind, the cross-platform aspect is not as clear-cut as Billy makes it appear. Whereas for an ISV the economic lock-in limits the TAM for its application, for a SaaS provider it can cut both ways. If the SaaS provider caters to customers for whom high availability is important, selecting gear from IBM or Sun might create a competitive advantage AND credibility. But if the SaaS provider caters to customers for whom cost is most important, selecting gear from Microsoft/Intel/AMD might be the better choice. The hardware platforms have different cost structures, and if a SaaS provider wants to straddle both customer groups it still needs some form of cross-platform portability.

Wednesday, July 9, 2008

Virtualization and Cloud Computing

- Create compute clouds

- Flexibly combine VM deployment with job deployment on a site configured to manage jobs using a batch scheduler

- Deploy one-click, auto-configuring virtual clusters

- Interface to Amazon EC2 resources

My experience with virtual clusters on EC2 has not been positive, so I am very interested to see if the Globus approach can deliver. The idea of being able to allocate a cluster in the background when I want to run an MPI application is just too appealing to give up on.

Friday, June 27, 2008

Grid versus Cloud Computing

The typical use of a cloud is information driven. Assuming Google as the quintessential cloud computing environment, the user is looking for information, and Google's programs have done their job in the past by taking in raw data and organizing it so that the user can find contextual information. Inside Google, scripts are organizing the schedules for launching the programs that crawl the web, compute the index, and update the production index.

I just reread Tom White's post, Running Hadoop MapReduce on Amazon EC2 and Amazon S3, which is a great example of all the steps needed to get a service running on a cloud. Once Hadoop is running and we periodically pick up the web log from S3, we would have a cloud for that particular task. The actual use case of analyzing a web log would be much simpler when executed on a grid because the grid would automatically start and stop the services needed on our behalf. However, keeping the services running 24/7 and interacting with them through a web interface is more of a cloud computing workflow, and that is the way start-ups are using AWS.
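To make the web log task concrete, here is a minimal sketch of the map and reduce steps in plain Python. It is not the Hadoop API and not the code from Tom White's post. It assumes log lines in the common access-log layout with the request in double quotes, and the function names `map_hits`, `reduce_hits`, and `count_hits` are made up for illustration.

```python
from collections import defaultdict
from typing import Dict, Iterable, Iterator, Tuple


def map_hits(line: str) -> Iterator[Tuple[str, int]]:
    """Map step: emit (requested path, 1) for one access-log line."""
    parts = line.split('"')
    if len(parts) < 3:
        return  # no quoted request field, skip the malformed line
    request = parts[1].split()  # e.g. ['GET', '/index.html', 'HTTP/1.1']
    if len(request) >= 2:
        yield request[1], 1


def reduce_hits(pairs: Iterable[Tuple[str, int]]) -> Dict[str, int]:
    """Reduce step: sum the counts emitted for each path."""
    totals: Dict[str, int] = defaultdict(int)
    for path, count in pairs:
        totals[path] += count
    return dict(totals)


def count_hits(lines: Iterable[str]) -> Dict[str, int]:
    """Run the map step over every line, then reduce the emitted pairs."""
    return reduce_hits(pair for line in lines for pair in map_hits(line))


if __name__ == "__main__":
    sample = [
        '10.0.0.1 - - [27/Jun/2008:10:00:00 +0000] "GET /index.html HTTP/1.1" 200 512',
        '10.0.0.2 - - [27/Jun/2008:10:00:05 +0000] "GET /about.html HTTP/1.1" 200 128',
        '10.0.0.1 - - [27/Jun/2008:10:00:09 +0000] "GET /index.html HTTP/1.1" 200 512',
    ]
    print(count_hits(sample))
```

On a real cluster the framework would distribute the map calls across nodes and group the pairs by key before reducing. The sketch runs both steps in one process to show only the shape of the computation.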

Tuesday, June 24, 2008

Data is the differentiator

The fundamental questions about your data that you need to answer concern:

1- security and privacy

2- size

3- location

4- format

Security and Privacy

This should be the starting point since it affects your liability. The current innovators of cloud computing (financial institutions, Google, Amazon) are global organizations with geographically dispersed operations. The business operation of one time zone should be visible to other time zones, so these organizations had to solve security and compliance with local privacy laws. Clearly, this has come at a significant cost. However, the nascent market for cloud computing resources in the form of Amazon Web Services makes it possible for start-ups to play in this new market. These start-ups clearly play a different game and their services tend to have very low security or privacy needs, which allows them to harbor a very disruptive technology. These start-ups will develop low-cost services that will provide powerful competition to EDS and other high-security, high-privacy outsourcers. They will not compete with them directly, and they will expand the market with a lower-cost alternative: two prime ingredients for disruptive technology.

Data Size

Data size is the next most important attribute. If your data is large, say a historical snapshot of the World Wide Web itself, you need to store and maintain petabytes of data. This clearly is a different requirement than if you just want to provide access to a million-row OLAP database. Size affects economics and algorithms, and it can also complicate the next attribute, location.

Data Location

The location of the data will affect what you can do with it. If the data size is very large, the time or economics of uploading/downloading the data set to a commercial cloud resource provider may be prohibitive. In case of the historical web snapshots, it is much better to generate the data in the cloud itself: that is, the data is created by the compute function you execute in the cloud. For the web index, this would be the set of crawlers that collect the web snapshot. There are readily available AMIs for Hadoop/Lucene/Nutch that enable a modest web indexing service using AWS.
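The transfer-time argument is easy to check with a few lines of arithmetic. This is a rough sketch only: the link speed and the 80% link efficiency are assumptions chosen for illustration, not measurements, and the function name `upload_days` is made up.

```python
SECONDS_PER_DAY = 86_400


def upload_days(data_bytes: float, link_bits_per_second: float, efficiency: float = 0.8) -> float:
    """Days needed to move a data set over a network link.

    efficiency is the assumed fraction of the nominal link rate that is
    actually achieved after protocol overhead and contention.
    """
    if data_bytes < 0 or link_bits_per_second <= 0 or not 0 < efficiency <= 1:
        raise ValueError("invalid size, link rate, or efficiency")
    seconds = data_bytes * 8 / (link_bits_per_second * efficiency)
    return seconds / SECONDS_PER_DAY


if __name__ == "__main__":
    petabyte = 1e15  # bytes, decimal definition
    print(f"1 PB over 100 Mbit/s: {upload_days(petabyte, 100e6):.0f} days")
    print(f"1 PB over 1 Gbit/s:   {upload_days(petabyte, 1e9):.0f} days")
```

Under these assumptions a petabyte needs more than three years on a 100 Mbit/s link, which is the kind of number that makes generating the data inside the cloud the only practical option.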

Data Format

The data format affects the details of how you use the data. For example, if you have your data in an OLAP database, you will need to have that OLAP database running in your process. Similarly, if you have complex data such as product geometry data on which you want to compute stress or vibrational analysis, you will need access to the geometry kernel used to describe the data. Finally, the data format affects the efficiency with which you can access and compute on your data. This is a frequently underestimated aspect of cloud computing, but it can have significant economic impact if you pay as you go for storage and computes.

Friday, June 20, 2008

Web Server as the smallest unit of Cloud Computing

The data managed by the web server is the source of differentiation. As a user I am looking for valuable or entertaining information, and I am willing to part with money, or time, to find it. This is the driving force behind any business value proposition for cloud computing. Interestingly enough, due to the vast alternatives available, price elasticity is extraordinarily discrete: we are willing to consume indiscriminately if it is free, but if we need to part with money it suddenly becomes a more emotional/rational activity. This explains the popularity of services supported by advertising: human beings are willing to tolerate some degree of SPAM as long as it allows them to consume other information for free.

The consumption of information requires some client device, and clearly there are some computes taking place in the client as well. For example, watching a YouTube clip on your smartphone requires some decent performance to decompress and decode the video stream. Universal information convergence is therefore not possible in my mind. The characteristics and usage models of a CAVE are fundamentally different from the characteristics and usage models of a smartphone. There is nothing that can change that. The clouds that serve up the converged information will therefore have to select the clients that are appropriate for consuming their information.

What is interesting to me is that the original World Wide Web vision of Sir Timothy John Berners-Lee is effectively cloud computing. Universal access to information among geographically dispersed teams was the impetus for the World Wide Web. Driven by business, we are now getting to a vocabulary that places that concept into the consumer space. Continued innovation by businesses to generate and extract value will push more and more computing behind the generation of information. Concurrently, the marketing departments will continue to obfuscate what is fundamentally a very easy to understand and desirable concept: a flat and universally accessible information world.

Thursday, June 19, 2008

Federated Clouds

So in a nutshell, clouds organize data to create value in terms of targeted information that can be sold or auctioned, such as advertising keywords, or the best price on an airline ticket. However, information truly is unbounded, and thus there will be many specialized clouds to add very specific value to specific raw data sets. For example, the data maintained in Amazon's cloud and its computational processes to create a searchable book store are very different compared to Google's Web Service or NASDAQ's Data Store.

Given the fact that not even Google can encapsulate all knowledge, diversity of information will lead to a commercially motivated federated system of clouds. Each cloud has its own optimized data organization capability to generate valuable information for profit. Amazon and Google will have opportunities for innovation that nobody else has due to their scale, but peripheral innovation will occur through aggregation, or mashups, of data residing in different clouds, thus creating a federation of clouds.

The starting premise of what makes a cloud a cloud is convergence of information. A federation of clouds continues this premise, and thus can itself be seen as a cloud.

Riches for SaaS providers

To generate the necessary economies of scale, SaaS is by necessity multi-tenant. Secondly, the dynamics of business haven't changed so SaaS providers need to race to critical mass in terms of installed customer base to continue to be relevant and generate free cash flow to drive continued innovation and roll out new functionality. Only the largest SaaS providers in a vertical will survive.

This creates the next monetization option for SaaS providers: business intelligence and benchmarking. The SaaS provider has brought together a wealth of companies all using the same business process codified in the SaaS functionality. Mining this data for trends and operational business metrics is just a small step. Global competition forced big companies to develop these business intelligence processes, and they had the operational scale necessary. The operations of small and medium businesses had to be aggregated before this opportunity could exist, and it will be many times more valuable than the SaaS functionality that the SaaS provider started with.
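As an illustration of how small that step is, the sketch below ranks one tenant against all tenants on a single operational metric. It is a hypothetical example: the metric (days sales outstanding), the tenant names, the values, and the function name `benchmark` are all invented, and a real provider would also have to deal with anonymization and customer consent.

```python
from statistics import median
from typing import Dict


def benchmark(metric_by_tenant: Dict[str, float], tenant: str) -> Dict[str, float]:
    """Compare one tenant's metric with the population of all tenants."""
    values = list(metric_by_tenant.values())
    own = metric_by_tenant[tenant]
    below = sum(1 for v in values if v < own)  # tenants with a strictly lower value
    return {
        "value": own,
        "median": median(values),
        "percentile": 100.0 * below / len(values),
    }


if __name__ == "__main__":
    # Hypothetical days-sales-outstanding figures for four tenants.
    dso = {"acme": 30.0, "bolt": 45.0, "core": 60.0, "dyna": 75.0}
    print(benchmark(dso, "core"))
```

Because every tenant runs the same codified business process, the metric means the same thing for each of them, which is what makes such a comparison meaningful.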

Wednesday, June 18, 2008

Cloud Computing Lingo

Grid Computing

Grid Computing is a collaboration model. Locally managed resources are virtualized and aggregated in a larger, more capable resource. Grid computing is concerned with coordinating problem solving in virtual organizations and typically is associated with large and complex "Grand Challenge" problems.

In the scientific community, multi-institutional collaboration is required to have any hope of solving fundamental questions as they arise in high-energy physics, fusion or climate research. It is in this community that the world wide web originated to fulfill the need for seamless document access among geographically dispersed team members, and it is also the birthplace of the grid. In 1990 the first HTML communication took place at CERN, and in 1994 the first grid was put together around the Supercomputing conference as a mechanism for all participants to share data and models. It was dubbed I-WAY at that time but it was the starting point of research to try to find solutions for security, resource management, job control, and data caching that are central to grid computing.

Examples are: TeraGrid, EuroGrid

HaaS, or Hosting as a Service

HaaS is a business service model. There are a lot of activities in modern business that are not core operational differentiators. These essential but peripheral services are better outsourced to specialists who can leverage economies of scale. Payroll management, shipping, and web presence are three examples of services that tend to be outsourced by most modern businesses, particularly small and medium sized businesses (SMBs).

Examples are: Startlogic, Hostmonster, Rackspace

SaaS, or Software as a Service

SaaS is a software deployment model. Application functionality is provided to the user through a web interface and the SaaS provider manages hardware and software operation and maintenance.

Examples: Salesforce.com, Webex, Netsuite

SaaS is generally associated with business software and marketed as a service to lower the cost of internally managed software. SaaS allows customers to lower the initial cost of software licenses and of the computer hardware to run them on.

- Web Site Hosting and Web Application Hosting Services are probably the most ubiquitous instances of the SaaS model

- Customer Relationship Management, or CRM, has many different instances, for example Salesforce.com, Siebel, or Coghead

- Completely integrated Enterprise Resource Planning (ERP) systems are provided by SAP, Oracle, Netsuite, Epicor, or Infor

Commercially, SaaS has carved out many different useful services. Unfortunately, this has led to a fragmentation of the market with the associated interoperability and economic lock-in problems. When selecting a SaaS provider, the overriding question should be whether you can move your data to other providers or bring it in house. SaaS becomes less interesting at a larger scale or if you want to extract business intelligence from your data. Plan for success but manage for failure. If the SaaS provider does not have a productive mechanism to get all the data out of the service, think twice before signing a contract.

PaaS, or Platform as a Service

PaaS is a software life-cycle model. Applications are developed, tested, deployed, hosted, and maintained on the same integrated platform.

Examples: Bungee Lab Connect, Comrange AppProducer

Web Services

Web Services represent anything that serves data, information, or access through a web browser. This is such a nebulous group of functionality that the term is more confusing than it is helpful. For example, Amazon's EC2 is billed as part of Amazon Web Services, but really AWS rents you an appliance on which you can install your own machine image. That appliance can then do anything, from web site serving, to application serving, to data mining, to web indexing, to running your OpenOffice spreadsheet model. Google's web services aggregate anything from email to calendaring to picture storage and of course web indexing, but they are distinctly different from Amazon's web services.

Web 2.0

Web 2.0 refers to the proposed second generation of Internet-based services where data services such as social networks, blogs, and wikis are connected and add value to each other. Collaboration is central to this model and users generate information and police themselves.

Welcome

Cloud Computing has as many interpretations as there are users, but there is one common thread among all cloud computing models and that is the convergence of information access. The 'Cloud' holds your information, the 'Cloud' may even compute on your information, and for real value, the 'Cloud' may combine other sources of information to make your data, or information, more valuable.

This blog takes the distinct position to reason about clouds from the user's productivity perspective. Enjoy.