I found two papers that report on experiments that use Amazon EC-2 as the IT fabric and deploy compute-intensive workloads on it. They compare these results to the performance obtained from on-premise clusters that follow best-known practices for compute-intensive workloads. The first paper uses the NAS benchmarks to get a broad sampling of high-performance computing workloads on the EC-2 cloud. It uses Amazon's High-CPU instances and compares them to similar processor gear in a cluster at NCSA. The IT gear details are shown in the following table:

| | EC-2 High-CPU Cluster | NCSA Cluster |
|---|---|---|
| Compute node | 7 GB memory, 4 cores per socket, 2 sockets per server, 2.33 GHz Xeon, 1600 GB storage | 8 GB memory, 4 cores per socket, 2 sockets per server, 2.33 GHz Xeon, 73 GB storage |
| Network interconnect | Specific interconnect technology unknown | InfiniBand network |

The NAS Parallel Benchmarks are a widely used set of programs designed to evaluate the performance of high-performance computing systems. The suite mimics the critical computation and data-movement patterns of compute-intensive workloads.

Clearly, when the workload is confined to a single server, the difference between the two compute environments is limited to the virtualization technology used and the effective available memory. In this experiment, the difference between Amazon EC-2 and a best-known practice cluster is 10% to 20% in favor of the non-virtualized server.

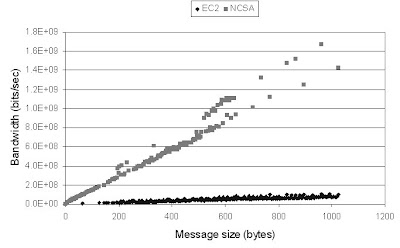

However, when the workload needs to reach across the network to other servers to complete its task, the performance difference is striking, as shown in the following figure.

Figure 1: NPB-MPI runtimes on 32 cores (4 dual-socket servers)

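The performance difference ranges from 2x to 10x in favor of an optimized high-performance cluster. Effectively, for the most communication-heavy benchmarks, Amazon can be up to ten times more expensive to operate than your own optimized equipment at a comparable hourly cost, because you pay for every hour the job takes to finish.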
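The cost claim follows from pay-per-use billing: if you pay by the node-hour, a job that runs N times longer costs N times more. A minimal sketch, assuming both environments cost the same per node-hour; the $1.00 rate and the one-hour baseline runtime are hypothetical placeholders, not actual Amazon or NCSA figures:

```python
def cost_per_job(runtime_hours: float, nodes: int, price_per_node_hour: float) -> float:
    """Dollar cost of one job when billed per node-hour (simplified model)."""
    return runtime_hours * nodes * price_per_node_hour


def cloud_cost_ratio(slowdown: float, cloud_price: float, own_price: float) -> float:
    """How many times more a job costs in the cloud than on owned equipment.

    `slowdown` is cloud runtime divided by owned-cluster runtime on the same
    number of nodes; both prices are per node-hour.
    """
    return slowdown * cloud_price / own_price


if __name__ == "__main__":
    nodes = 4          # 32 cores = 4 dual-socket, quad-core servers
    price = 1.00       # hypothetical $/node-hour, assumed equal for BOTH environments
    fast_hours = 1.0   # hypothetical runtime on the optimized cluster
    for slowdown in (2.0, 10.0):  # the range reported for the NPB-MPI runs
        cloud = cost_per_job(fast_hours * slowdown, nodes, price)
        own = cost_per_job(fast_hours, nodes, price)
        print(f"{slowdown:>4}x slower -> cloud ${cloud:.2f} vs own ${own:.2f}")
```

With equal node-hour prices the cost ratio is simply the slowdown; a cheaper cloud rate shrinks it proportionally, which is why the ten-times figure holds only under that equal-price assumption.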

The second paper addresses the added cost of using cloud IT infrastructure for compute-intensive workloads. In this experiment, they use HPL (High Performance LINPACK), the common benchmark for measuring the performance of a supercomputer. HPL is relatively kind to a cluster in the sense that it taxes interconnect bandwidth and latency much less than other compute-intensive workloads such as optimization, information retrieval, or web indexing. The experiment measures the sustained floating-point rate (FLOPS), that is, the average number of floating-point operations performed divided by the average compute time used, and relates it to the dollar cost of the cluster. The results show an exponential decrease in performance per dollar as the cluster grows: if we double the cluster size, the FLOPS obtained per dollar spent goes down.
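The metric can be made concrete. HPL conventionally credits 2/3 N³ + 3/2 N² floating-point operations for solving an N × N dense system; dividing by wall-clock time gives the FLOPS rate, and dividing the work done by the dollars spent gives performance per dollar. A minimal sketch, assuming simple node-hour billing; the per-node peak, the price, and the parallel efficiencies below are hypothetical placeholders, not figures from the paper:

```python
def hpl_flop_count(n: int) -> float:
    """Operation count HPL credits for an n x n dense solve: 2/3 n^3 + 3/2 n^2."""
    return (2.0 / 3.0) * n**3 + 1.5 * n**2


def hpl_gflops(n: int, seconds: float) -> float:
    """Sustained rate: floating-point operations divided by wall-clock time, in GFLOPS."""
    return hpl_flop_count(n) / seconds / 1e9


def gflop_per_dollar(rate_gflops: float, nodes: int, price_per_node_hour: float) -> float:
    """Floating-point work bought per dollar under node-hour billing."""
    # GFLOPS * 3600 s/hour = GFLOP per hour; divide by the whole cluster's $/hour.
    return rate_gflops * 3600.0 / (nodes * price_per_node_hour)


if __name__ == "__main__":
    peak_per_node = 10.0  # hypothetical GFLOPS per node
    price = 1.0           # hypothetical $/node-hour
    # Hypothetical parallel efficiencies, NOT measurements from the paper.
    for nodes, efficiency in [(1, 0.9), (2, 0.9), (4, 0.45)]:
        rate = nodes * peak_per_node * efficiency
        print(nodes, "nodes:", round(gflop_per_dollar(rate, nodes, price)), "GFLOP/$")
```

Under this model GFLOP per dollar works out to peak × efficiency × 3600 / price, so the node count cancels out: the per-dollar figure stays flat from one to two nodes and halves at four nodes purely because efficiency halved. Any decline in performance per dollar as the cluster grows therefore comes from lost parallel efficiency.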

The first paper has a wonderful graph that explains what is causing this weak scaling result.

This figure shows the bisection bandwidth of the Amazon EC-2 cluster and that of a best-known practice HPC cluster. Bisection bandwidth is the bandwidth available between two equal halves of a cluster, measured across the split where it is lowest. It is a measure of how well connected all the servers are to one another. The focus of typical clouds on providing a productive, high-margin service pushes them toward IT architectures that do not favor interconnect bandwidth between servers. Many clouds consist of commodity servers connected to a SAN, with network bandwidth allocated to the server-to-storage path rather than to server-to-server traffic. That is the opposite of what high-performance clusters for compute-intensive workloads have evolved toward.
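A small brute-force sketch makes the definition concrete: try every way of splitting the servers into two equal halves, add up the capacity of the links that cross the split, and keep the smallest total. The three topologies and the unit link capacities are hypothetical toy models (switches are abstracted into direct links), not descriptions of EC-2 or the NCSA cluster, and brute force is only practical for a handful of nodes:

```python
from itertools import combinations


def bisection_bandwidth(n: int, links: dict[tuple[int, int], float]) -> float:
    """Minimum total capacity of links cut when n nodes are split into equal halves.

    `links` maps an edge (u, v) with u < v to its capacity.
    Exhaustive search over balanced partitions, so only usable for small n.
    """
    if n % 2:
        raise ValueError("bisection needs an even number of nodes")
    best = float("inf")
    # Pin node 0 to one half so each split is examined once, not twice.
    for rest in combinations(range(1, n), n // 2 - 1):
        half = {0, *rest}
        cut = sum(cap for (u, v), cap in links.items() if (u in half) != (v in half))
        best = min(best, cut)
    return best


def full_mesh(n: int, cap: float = 1.0) -> dict[tuple[int, int], float]:
    """Every server linked to every other: an idealized non-blocking fabric."""
    return {(u, v): cap for u, v in combinations(range(n), 2)}


def ring(n: int, cap: float = 1.0) -> dict[tuple[int, int], float]:
    """Each server linked only to its two neighbors."""
    return {tuple(sorted((i, (i + 1) % n))): cap for i in range(n)}


def two_racks(rack_size: int, uplink: float = 1.0, cap: float = 1.0) -> dict[tuple[int, int], float]:
    """Two internally fully connected racks joined by a single uplink."""
    links = {}
    for start in (0, rack_size):
        for u, v in combinations(range(start, start + rack_size), 2):
            links[(u, v)] = cap
    links[(0, rack_size)] = uplink
    return links


if __name__ == "__main__":
    for name, links in [("full mesh", full_mesh(8)), ("ring", ring(8)), ("two racks", two_racks(4))]:
        print(f"{name:>9}: bisection bandwidth = {bisection_bandwidth(8, links):g}")
```

For eight servers the full mesh keeps 16 units of capacity across its weakest split, the ring keeps 2, and the two-rack layout keeps only its single uplink, so any all-to-all exchange between the halves of that cluster is throttled by one link no matter how fast the servers are.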

This means that for enterprise-class problems, where the efficiency of IT equipment is a differentiator in solving the problems at hand, cloud IT infrastructure solutions are not yet well matched. However, for SMBs that are mostly seeking elasticity and on-demand use, cloud solutions can still work, since there are still monetary benefits to be extracted from deploying compute-intensive workloads on Amazon or other clouds.
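The SMB argument comes down to utilization: an owned cluster is paid for whether or not it is busy, while cloud capacity is paid for only when used, even if each job runs slower. A rough break-even sketch under that simplified cost model; all dollar figures and the slowdown factor below are hypothetical placeholders:

```python
def break_even_utilization(own_cost_per_hour: float,
                           cloud_cost_per_hour: float,
                           slowdown: float) -> float:
    """Busy fraction below which renting cloud capacity is cheaper than owning.

    own_cost_per_hour: amortized cost of the owned cluster, paid every hour.
    cloud_cost_per_hour: cloud price for the same number of nodes, paid only when used.
    slowdown: cloud runtime divided by owned-cluster runtime for the same work.
    """
    if slowdown <= 0 or cloud_cost_per_hour <= 0:
        raise ValueError("slowdown and cloud price must be positive")
    # Work that keeps the owned cluster busy a fraction u of the time needs
    # u * slowdown cloud hours, i.e. u * slowdown * cloud_cost_per_hour per hour.
    return min(1.0, own_cost_per_hour / (slowdown * cloud_cost_per_hour))


def cheaper_option(utilization: float, own_cost_per_hour: float,
                   cloud_cost_per_hour: float, slowdown: float) -> str:
    """Return "cloud" or "own" for a given utilization of the owned cluster."""
    threshold = break_even_utilization(own_cost_per_hour, cloud_cost_per_hour, slowdown)
    return "cloud" if utilization < threshold else "own"


if __name__ == "__main__":
    # Hypothetical: $3/h owned (amortized), $2/h cloud, jobs 3x slower in the cloud.
    for u in (0.1, 0.4, 0.6, 0.9):
        print(f"utilization {u:.0%}: {cheaper_option(u, 3.0, 2.0, 3.0)}")
```

With these placeholder numbers renting wins below 50% utilization. The break-even point scales with 1/slowdown, so a larger slowdown, such as the 10x seen on the communication-heavy NPB runs, pushes it down proportionally.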


2 comments:

Nice post... as neat as a police procedural. The interesting question to me is whether the inter-server bandwidth limitations result from the immaturity of the market (not enough cloud apps really need this), or whether there's a fundamental economic or technological reason it will be harder for clouds to address this than for private clusters.

I believe it to be the (im)maturity of the cloud computing market. My prediction is that the cloud space will specialize, with some clouds running on Tier IV data centers for high availability, others organizing and optimizing for data mining (see Cloudera), others optimizing for video storage, and so on. Just as on-premise data center IT teams have realized that you cannot be everything to everyone, the economics of cloud computing will force specialization of cloud services. You simply cannot provide access to an HPC-oriented cluster at $0.10 per CPU-hour and expect to make any money. Given that Google Squared and Wolfram Alpha are blazing an HPC trail for the semantic web, I fully expect that specialized clouds for such workloads will be able to generate intangible value that can be monetized handsomely.
